How Do AI Detectors Work?

Have you ever wondered how someone might tell if a piece of writing was generated by an AI or by a human? That’s exactly what AI content detectors aim to do.

These tools analyze a given text and look for subtle clues that suggest whether it was machine-made or human-written. The process involves a mix of advanced machine learning, linguistic analysis, and a bit of statistical “sniffing out” of patterns.

Understanding AI Content Detectors

At their core, AI content detectors are trained algorithms. Developers feed them huge amounts of example text – some written by humans, and some produced by AI models – so the detector can learn the differences.

Over time, the detector picks up on certain patterns that tend to distinguish human writing from AI-generated text. Once trained, the detector can take a new piece of writing and compare its style and characteristics against those learned patterns to make an educated guess about its origin.

Unlike a simple plagiarism checker (which might look for exact copied phrases), AI detectors are more nuanced. They inspect how the text is written rather than what it’s saying.

In other words, they care more about the writing style and statistical fingerprints than about the topic or factual content.

This is because AI-generated text often has telltale signs in its structure and word usage that careful analysis can catch.

Key Techniques and Clues Used by Detectors

1. Perplexity – The Predictability of the Text

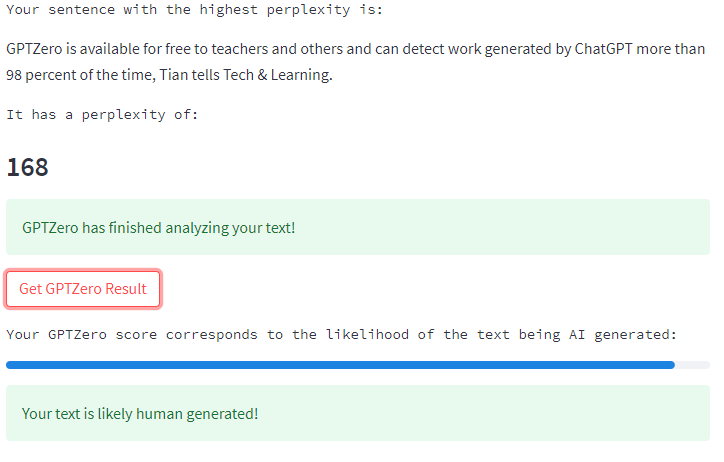

One of the main clues detectors examine is called perplexity. It measures how predictable a text is to a language model: roughly, how surprised the model is, on average, by each next word. Human writing usually mixes unsurprising phrases with creative, unusual word choices.

AI-generated text, on the other hand, can be too predictable. If every sentence flows in a highly probable, almost mechanical way, with very expected word choices, a detector sees that as a sign that an AI might have written it.

Low perplexity (meaning the text was very predictable) often raises a red flag for being AI-generated.
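Formally, perplexity is the exponential of the average negative log-probability that a language model assigns to each actual next token. The minimal sketch below assumes you already have those per-token probabilities; real detectors get them by running the text through a large language model. The function name `perplexity` and the sample probabilities are hypothetical.

```python
import math

def perplexity(token_probs):
    """Return exp of the mean negative log-probability of the tokens.

    token_probs: probabilities a language model assigned to each actual
    next token (hypothetical input; a real detector gets these from a model).
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)

# Hypothetical probabilities for a "predictable" text and a "surprising" one.
predictable = [0.9, 0.8, 0.85, 0.9]
surprising = [0.2, 0.05, 0.4, 0.1]

print(round(perplexity(predictable), 2))  # low perplexity
print(round(perplexity(surprising), 2))   # noticeably higher perplexity
```

As a sanity check, a model that gave every token a probability of 0.25 would produce a perplexity of exactly 4, as uncertain as a fair four-way choice.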

2. Burstiness – Variation in Style

Human writers have quirks; they might write one long, complex sentence followed by a short punchy one. They might shift tone or throw in a colloquial phrase here or there. This leads to what detectors call higher burstiness – essentially, variability in sentence lengths and structures.

AI models tend to produce more uniform sentences and a steadier tone throughout. If a text lacks that natural variation, with every sentence following a similar rhythm and length, a detector notes that as unusual and possibly artificial.
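There is no single standard formula for burstiness. One simple proxy, assumed here for illustration, is the coefficient of variation of sentence lengths: the standard deviation of words per sentence divided by the mean. The sentence splitter is a naive regex, and the function names are hypothetical.

```python
import re
import statistics

def sentence_lengths(text):
    """Split on ., ! or ? followed by whitespace (naive) and count words per sentence."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence length: 0 means perfectly uniform."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = ("I waited. Then, after what felt like an entire afternoon of "
          "pacing the hallway, the phone finally rang. Relief!")

print(round(burstiness(uniform), 2))  # 0.0: every sentence has four words
print(round(burstiness(varied), 2))   # well above 0: lengths of 2, 16 and 1 words
```

A real detector would combine a measure like this with many other signals rather than judge on sentence length alone.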

3. Repetition and Redundancy

AI content can sometimes get a bit repetitive. Because AI models are trained on massive amounts of text, they tend to favor common phrases or restate information, especially when asked to hit a word count.

Detectors scan for repeated patterns of words, known as n-grams (sequences of n consecutive words). For example, if a phrase like “In conclusion, it is important to note that…” keeps reappearing, that repetitive structure might indicate an AI was at work, since humans usually vary their wording more.
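Counting repeated n-grams takes only a few lines. This sketch uses word trigrams (n = 3) and a simple lowercase tokenizer; both choices, and the function name `repeated_ngrams`, are illustrative assumptions rather than any particular detector’s method.

```python
import re
from collections import Counter

def repeated_ngrams(text, n=3):
    """Return every n-word sequence that occurs more than once, with its count."""
    tokens = re.findall(r"[a-z']+", text.lower())
    # zip over n staggered copies of the token list to build consecutive n-grams
    ngrams = zip(*(tokens[i:] for i in range(n)))
    counts = Counter(ngrams)
    return {gram: c for gram, c in counts.items() if c > 1}

sample = ("In conclusion, it is important to plan. "
          "In conclusion, it is important to rest.")
for gram, count in repeated_ngrams(sample).items():
    print(" ".join(gram), count)
```

In practice a detector would normalize these counts by text length, since a long document naturally repeats more short phrases than a short one.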

4. Consistent Vocabulary and Tone

Human writing often has little inconsistencies – maybe a fancy term in one paragraph and casual slang in another, or a level of detail that shifts with the writer’s mood or focus. AI-generated text often maintains a very consistent vocabulary and tone all the way through.

If every sentence is at the same reading level and there are no informal asides or odd little interjections that humans might include, detectors take note. An oddly uniform complexity or tone can look suspiciously non-human.
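As a crude stand-in for “reading level,” the hypothetical sketch below measures the average word length of each sentence and reports how much that figure varies across the text. Real tools use richer readability and style features; average word length is an assumption chosen only to keep the example short.

```python
import re
import statistics

def complexity_profile(text):
    """Average word length (in letters) for each sentence, a rough complexity proxy."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    profile = []
    for s in sentences:
        words = re.findall(r"[A-Za-z']+", s)
        if words:
            profile.append(sum(len(w) for w in words) / len(words))
    return profile

def complexity_spread(text):
    """Population standard deviation of per-sentence complexity; near 0 means very uniform."""
    profile = complexity_profile(text)
    return statistics.pstdev(profile) if len(profile) > 1 else 0.0

flat = "I ran. I ran. I ran."
mixed = "Yes. Extraordinarily complicated documentation."

print(complexity_spread(flat))   # 0.0: identical sentences
print(complexity_spread(mixed))  # large: a 3-letter sentence next to 13-letter words
```

A very low spread on its own proves nothing; it is one more hint a detector might weigh alongside the others.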

5. Machine Learning Classification

Beyond these stylistic clues, modern AI detectors often employ dedicated machine learning models under the hood.

These models are classifiers trained specifically to tell AI and human writing apart. They consider many features at once (such as perplexity, burstiness, and vocabulary use) and output a probability or score.

For instance, the detector might report “there’s an 85% chance this text is AI-generated.” Under the hood, such classifiers are often neural networks, sometimes fine-tuned language models themselves, that map the text to a score using patterns learned during training.
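Production detectors typically use neural networks, but the core idea of combining several features into one probability can be shown with logistic regression, a deliberately simpler stand-in. The two features (a scaled perplexity and a burstiness score) and the tiny training set below are made up purely for illustration, as are the function names.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function mapping any real number into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(weights, bias, features):
    """Probability that a text with these features is AI-generated."""
    return sigmoid(bias + sum(w * x for w, x in zip(weights, features)))

def train_logistic(samples, labels, lr=0.1, epochs=2000):
    """Fit weights with plain stochastic gradient descent on log-loss."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            err = predict_proba(weights, bias, x) - y  # gradient of log-loss w.r.t. the logit
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias

# Made-up training data: [scaled perplexity, burstiness]; label 1 = AI, 0 = human.
samples = [[0.20, 0.10], [0.25, 0.15], [0.30, 0.20],
           [0.70, 0.60], [0.80, 0.50], [0.75, 0.70]]
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train_logistic(samples, labels)
new_text_features = [0.35, 0.20]  # hypothetical measurements for a new text
print(f"{predict_proba(weights, bias, new_text_features):.0%} chance AI-generated")
```

The output is a probability rather than a yes/no answer, which is why detectors report scores like the 85% example.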

6. Semantic Analysis

Some advanced detectors also use something called semantic embeddings – basically converting sentences into numerical vectors that capture meaning. By doing this, they can compare the meaning and context of your text to known AI-written texts.

If your text’s semantic profile closely matches what the AI examples look like (even if the words differ), that can be an additional hint. It’s like understanding the writing on a deeper level: not just surface patterns, but the overall essence and flow of ideas, and seeing if that essence aligns more with machine or human style.
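Comparisons between embeddings usually rely on cosine similarity. In this sketch the three-dimensional vectors are invented (real sentence embeddings come from an embedding model and have hundreds of dimensions), and comparing against the average, or centroid, of each group is one simple strategy assumed for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 = same direction, 0 = unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)

def centroid(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def closer_to(text_vec, ai_vecs, human_vecs):
    """Return which group's centroid the text is more similar to, plus that similarity."""
    ai_sim = cosine_similarity(text_vec, centroid(ai_vecs))
    human_sim = cosine_similarity(text_vec, centroid(human_vecs))
    return ("AI", ai_sim) if ai_sim > human_sim else ("human", human_sim)

# Invented embeddings for known examples and for a new text.
ai_examples = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]]
human_examples = [[0.1, 0.9, 0.3], [0.2, 0.7, 0.5]]
new_text = [0.85, 0.15, 0.05]

print(closer_to(new_text, ai_examples, human_examples))
```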

What Happens After Analysis: Scoring and Results

Once an AI detector has crunched all these numbers and patterns, it typically gives a result in the form of a score or a label.

You might see something like “90% likely to be AI-generated” or perhaps a simpler verdict like “The tool thinks this is AI-written.”

It’s important to remember these scores are about likelihood, not certainty. For example, a 60% AI-likely score means the system has somewhat more evidence pointing to AI than human, but it’s not absolutely sure.

Many detectors provide a visual breakdown, highlighting specific sentences they think are dubious. For instance, one sentence might be flagged as “too predictable” or another as “highly repetitive.” This forensic approach helps users understand why the detector came to its conclusion.
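Sentence-level highlighting can be sketched as scoring each sentence separately and flagging those above a cutoff. Here `score_sentence` stands in for whatever per-sentence model a real tool uses; the toy phrase-matching scorer and the 0.5 cutoff are hypothetical.

```python
import re

def flag_sentences(text, score_sentence, cutoff=0.5):
    """Return (sentence, score) pairs whose score meets or exceeds the cutoff."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    scored = [(s, score_sentence(s)) for s in sentences]
    return [(s, score) for s, score in scored if score >= cutoff]

def toy_score(sentence):
    """Hypothetical scorer: treats a couple of stock phrases as suspicious."""
    stock = ("it is important to note", "in conclusion")
    return 0.9 if any(p in sentence.lower() for p in stock) else 0.2

text = ("I burned the toast again. "
        "In conclusion, it is important to note that breakfast matters.")
for sentence, score in flag_sentences(text, toy_score):
    print(f"[{score:.0%}] {sentence}")
```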

Some tools simplify things to categories – for example, outputting labels such as “Highly likely AI,” “Unclear/Mixed,” or “Likely Human.” These categories are based on threshold scores. So if a piece scores above, say, 80% AI-likely, the tool might simply label it “AI.”
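Mapping a score to a category is a simple threshold check. The 80% upper cutoff follows the example above; the 40% lower cutoff and the function name `label_for` are hypothetical choices for illustration, and real tools set their own thresholds.

```python
def label_for(ai_probability, high=0.8, low=0.4):
    """Map a 0-1 AI-likelihood score to a coarse label (thresholds are illustrative)."""
    if not 0.0 <= ai_probability <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if ai_probability > high:
        return "Highly likely AI"
    if ai_probability < low:
        return "Likely Human"
    return "Unclear/Mixed"

for score in (0.9, 0.6, 0.15):
    print(score, "->", label_for(score))
```

Note how much information the labels discard: scores of 0.41 and 0.79 both become “Unclear/Mixed.”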

Can AI Detectors Be Fooled?

AI detectors, while clever, are not foolproof. Just as viruses and antivirus software are in a constant arms race, AI writing tools and detection tools are continually evolving.

A creative human can intentionally edit AI-generated text to make it more human-like (by adding slang, making sentences choppy, injecting a few typos or quirks).

Such modifications can throw off a detector. In fact, even without human intervention, newer AI models are getting better at mimicking human style, making the detector’s job harder.

False positives and false negatives do happen. A false positive is when the detector wrongly tags a human-written piece as AI-generated (imagine a student being falsely accused of using AI on an essay they wrote themselves – unfortunately, cases like this have occurred).

A false negative is the opposite – AI-written text that slips through labeled as “human.” Because of these possibilities, experts caution against using detector results as the sole proof. They are one indicator, not a courtroom verdict.
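Both error rates are easy to compute once you have texts whose true origin is known. The labels below are hypothetical evaluation data, and `error_rates` is an illustrative helper.

```python
def error_rates(actual, predicted):
    """Compute false positive and false negative rates.

    actual/predicted: lists of "ai" or "human" labels, aligned by index.
    """
    pairs = list(zip(actual, predicted))
    on_human = [p for a, p in pairs if a == "human"]  # predictions for human-written texts
    on_ai = [p for a, p in pairs if a == "ai"]        # predictions for AI-written texts
    fpr = sum(p == "ai" for p in on_human) / len(on_human) if on_human else 0.0
    fnr = sum(p == "human" for p in on_ai) / len(on_ai) if on_ai else 0.0
    return fpr, fnr

actual    = ["human", "human", "human", "human", "ai", "ai", "ai", "ai"]
predicted = ["human", "ai", "human", "human", "ai", "human", "ai", "ai"]
fpr, fnr = error_rates(actual, predicted)
print(f"False positive rate: {fpr:.0%}")  # 1 of 4 human texts flagged -> 25%
print(f"False negative rate: {fnr:.0%}")  # 1 of 4 AI texts missed -> 25%
```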

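Notably, OpenAI itself released an AI-writing detector, its AI Text Classifier, in early 2023, only to withdraw it in July 2023 because of its low accuracy. By OpenAI’s own figures, it correctly flagged only 26% of AI-written text (about 1 in 4) while mislabeling human-written text as AI-generated 9% of the time, which shows how challenging this problem is, even for the creators of the AI.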
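Those accuracy figures matter even more when most texts are human-written. OpenAI reported that its classifier caught 26% of AI-written text and wrongly flagged 9% of human-written text. The sketch below plugs those rates into a hypothetical batch of 1,000 essays, assuming (purely for illustration) that 10% of them are AI-written.

```python
def flag_breakdown(total, ai_share, true_positive_rate, false_positive_rate):
    """Expected counts of correct and incorrect AI flags in a batch of texts."""
    ai_texts = total * ai_share
    human_texts = total - ai_texts
    true_flags = ai_texts * true_positive_rate       # AI texts correctly flagged
    false_flags = human_texts * false_positive_rate  # human texts wrongly flagged
    share_false = false_flags / (true_flags + false_flags)
    return true_flags, false_flags, share_false

# 1,000 essays, 10% AI-written (assumed), with the reported 26% / 9% rates.
true_flags, false_flags, share_false = flag_breakdown(1000, 0.10, 0.26, 0.09)
print(f"Correct flags: {true_flags:.0f}, wrong flags: {false_flags:.0f}")
print(f"Share of flags that hit human writers: {share_false:.0%}")
```

Under these assumptions the tool raises about 26 correct flags and 81 wrong ones, so roughly three in four flagged essays would actually be human-written.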

The Bottom Line: Use Detectors Wisely

AI content detectors are fascinating and useful tools, especially for educators checking student work or publishers wanting to know if an article was auto-generated. They work by examining the DNA of the writing – its predictability, variety, and other patterns – much like a language CSI unit. However, their verdicts should be taken with a grain of salt. Think of them as a highly trained sniffer dog: they can point you in a direction, but a human still needs to judge the context.

If you use an AI detector, it’s best to treat the results as one piece of evidence. Combine it with your own judgment about the content quality. Does the text have factual errors or a strangely consistent tone? Those human common-sense factors, together with the detector’s technical analysis, will give you the best overall picture.
